Today, I am rebooting this project after some difficult times. I review what I have done so far and what still needs to be developed or written.

Implementation

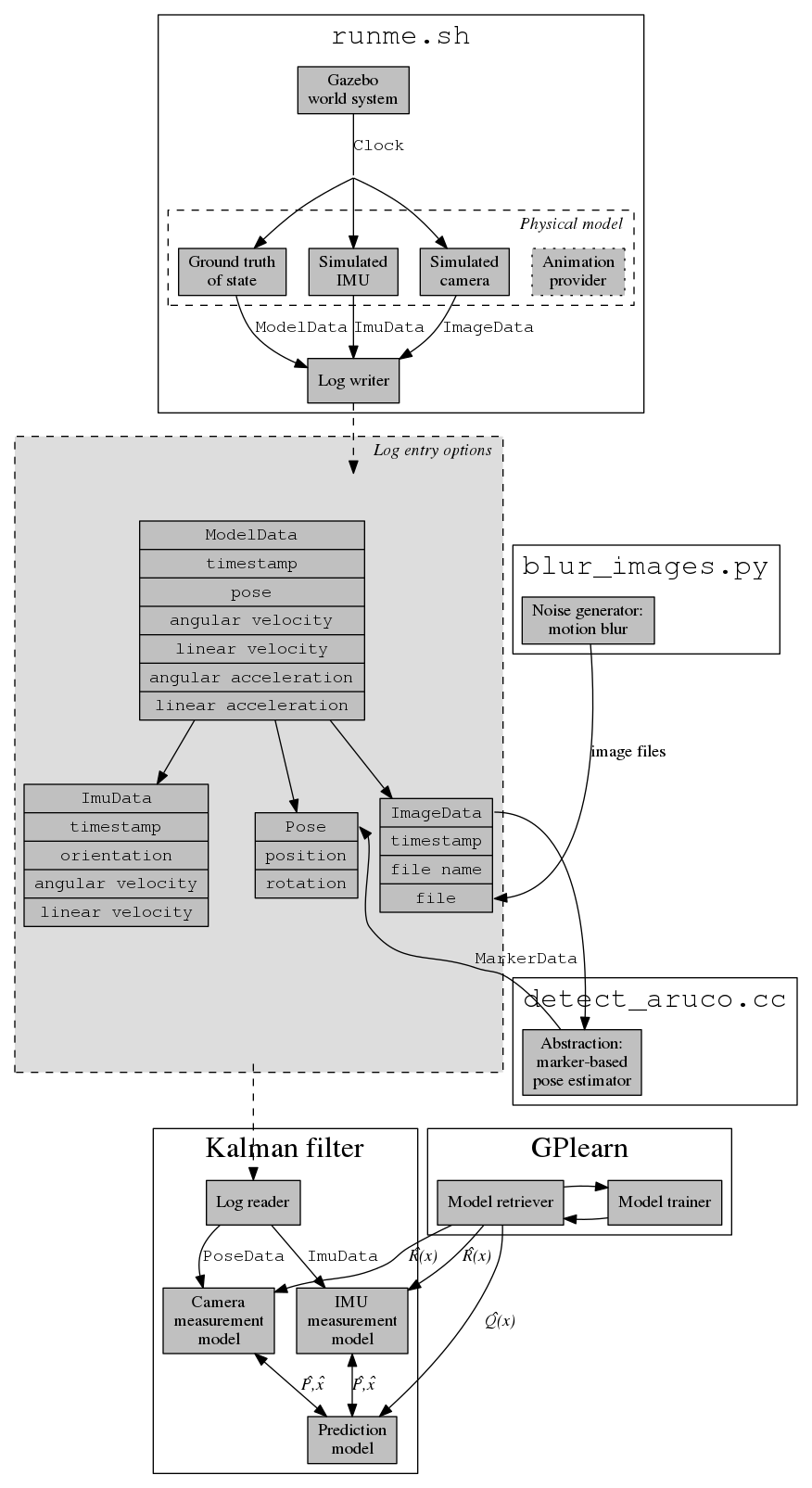

First off: implementation. The block diagram above shows the whole system in detail. Each cluster describes a separate step in the workflow of the system. If an executable file corresponds to a step, the cluster's title is that file name. A full discussion of each cluster follows.

runme.sh

This is the part that generates data. Everything in it is written to work with Gazebo, the physics simulator that is tightly integrated with ROS. Among other things, the Gazebo world provides a common clock signal for all other data providers. The model plugin in model_control_plugin.cc animates the model and calls the logging of all related information: ground truth, IMU and camera data.

To do

Must do: Nothing. Optional:

- Multiple different animations.

Log entry options

Logs are written with the C++ serialization library cereal. Each entry contains the following information:

- gazebo::common::Time time
- ModelData: stores ground truth data of the model; no noise is added to this, ever.
  - gazebo::common::Time timestamp
  - Pose pose
    - Eigen::Vector3d position
    - Eigen::Quaternion rotation
  - Eigen::Vector3d angularVelocity
  - Eigen::Vector3d linearVelocity
  - Eigen::Vector3d angularAcceleration
  - Eigen::Vector3d linearAcceleration
- std::variant<ImageData, ImuData, Pose> field

ImageData, ImuData and Pose are defined as follows:

- ImuData: stores data generated by Gazebo's IMU.
  - gazebo::common::Time timestamp
  - Eigen::Quaternion orientation
  - Eigen::Vector3d angularVelocity
  - Eigen::Vector3d linearAcceleration
- ImageData: refers to the actual image frame, which is saved as a separate file for easier processing of the data.
  - gazebo::common::Time timestamp
  - std::string name
- Pose: represents the pose of the system, transformed to the common coordinate frame of the system.
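The entry layout above can be sketched in plain standard C++ as follows. This is a minimal sketch, not the project's code: Time, Vec3 and Quat are hypothetical stand-ins for gazebo::common::Time, Eigen::Vector3d and Eigen::Quaternion, the member name groundTruth and the helpers makeImageEntry and describe are made up for illustration, and the cereal serialization functions are left out. It shows how std::visit dispatches on whichever alternative an entry holds.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <utility>
#include <variant>

// Hypothetical stand-ins for gazebo::common::Time, Eigen::Vector3d and
// Eigen::Quaternion, so that the sketch compiles without Gazebo or Eigen.
struct Time { int sec = 0; int nsec = 0; };
struct Vec3 { double x = 0, y = 0, z = 0; };
struct Quat { double w = 1, x = 0, y = 0, z = 0; };

struct Pose {
    Vec3 position;
    Quat rotation;
};

// Ground truth of the model; no noise is ever added to it.
struct ModelData {
    Time timestamp;
    Pose pose;
    Vec3 angularVelocity;
    Vec3 linearVelocity;
    Vec3 angularAcceleration;
    Vec3 linearAcceleration;
};

// Data generated by Gazebo's IMU.
struct ImuData {
    Time timestamp;
    Quat orientation;
    Vec3 angularVelocity;
    Vec3 linearAcceleration;
};

// Only the file name is logged; the frame itself is a separate file.
struct ImageData {
    Time timestamp;
    std::string name;
};

struct LogEntry {
    Time time;
    ModelData groundTruth;  // hypothetical member name
    std::variant<ImageData, ImuData, Pose> field;
};

// Hypothetical helper: builds an entry that refers to a saved image file.
LogEntry makeImageEntry(Time t, std::string name) {
    assert(!name.empty() && "an image entry must name a file");
    LogEntry entry;
    entry.time = t;
    entry.field = ImageData{t, std::move(name)};
    return entry;
}

// Returns a short label for the kind of measurement an entry carries.
std::string describe(const LogEntry& entry) {
    return std::visit([](const auto& m) -> std::string {
        using T = std::decay_t<decltype(m)>;
        if constexpr (std::is_same_v<T, ImageData>) {
            return "image " + m.name;
        } else if constexpr (std::is_same_v<T, ImuData>) {
            return "imu";
        } else {
            return "pose";
        }
    }, entry.field);
}
```

A std::variant keeps each entry a single value type while forcing every consumer to handle each measurement kind explicitly, which suits a log that mixes several data streams.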

To do

Must do: Nothing. Optional: Nothing.

blur_images.py

Gazebo's rendering engine does not produce motion blur. Because motion blur is essential to my project (one of its points is that the camera alone is not sufficient and needs additional information from the IMU; without motion blur, this argument does not hold), I have to add it myself.

blur_images.py reads the image files in alphabetical order and modifies them. It offers two blurring methods (I need citations!), which can be parameterized from the command line.
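One common way to fake motion blur is to convolve each frame with a line-shaped averaging kernel oriented along the direction of motion. The sketch below is not necessarily either of the two methods in blur_images.py (those still need citations); it assumes a grayscale image stored row-major in a std::vector<float>, purely horizontal motion, and a hypothetical kernel length parameter. Samples past the image border are clamped to the edge pixel.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Horizontal linear motion blur: every output pixel is the mean of `length`
// consecutive pixels in its row (centred on the pixel for odd lengths).
// Samples that fall outside the image are clamped to the nearest edge pixel.
std::vector<float> motionBlurHorizontal(const std::vector<float>& image,
                                        int width, int height, int length) {
    assert(width > 0 && height > 0 && length > 0);
    assert(image.size() == static_cast<std::size_t>(width) * height);
    std::vector<float> out(image.size());
    const int half = length / 2;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            float sum = 0.0f;
            for (int k = 0; k < length; ++k) {
                const int sx = std::clamp(x - half + k, 0, width - 1);
                sum += image[static_cast<std::size_t>(y) * width + sx];
            }
            out[static_cast<std::size_t>(y) * width + x] = sum / length;
        }
    }
    return out;
}
```

A longer kernel corresponds to faster motion during the exposure; blurring along an arbitrary direction would need interpolated sampling instead of whole-pixel steps.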

To do

Must do:

- Find citations for these blurring methods.

Optional: Nothing

detect_aruco.cc

To estimate the camera's pose, I use ArUco markers. For this, I read the log file and write a new one: image entries are filtered out, processed by the ArUco detector, and replaced with the camera's estimated pose at the corresponding timestamp.
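The rewriting pass described above can be sketched as a single loop over the entries: non-image entries pass through, and each image entry is replaced by a pose entry with the same time. This uses simplified stand-in types; detectCameraPose is a hypothetical placeholder for the actual ArUco detection (which may find no marker, hence std::optional), and dropping frames without a detection is an assumption, not necessarily what detect_aruco.cc does.

```cpp
#include <cassert>
#include <functional>
#include <optional>
#include <string>
#include <variant>
#include <vector>

// Simplified stand-ins for the logged types (times as seconds).
struct Pose { double x = 0, y = 0, z = 0; };  // orientation omitted for brevity
struct ImuData { double timestamp = 0; };
struct ImageData { double timestamp = 0; std::string name; };
struct Entry {
    double time = 0;
    std::variant<ImageData, ImuData, Pose> field;
};

// Hypothetical detector signature: returns the camera pose seen in an image
// file, or std::nullopt when no marker is visible.
using Detector = std::function<std::optional<Pose>(const std::string&)>;

// Copies the log, replacing every image entry by the detected camera pose at
// the same time. Frames without a detection are dropped (an assumption).
std::vector<Entry> replaceImagesWithPoses(const std::vector<Entry>& log,
                                          const Detector& detectCameraPose) {
    assert(detectCameraPose);
    std::vector<Entry> out;
    out.reserve(log.size());
    for (const Entry& e : log) {
        if (const auto* image = std::get_if<ImageData>(&e.field)) {
            if (auto pose = detectCameraPose(image->name)) {
                out.push_back(Entry{e.time, *pose});
            }
        } else {
            out.push_back(e);  // IMU and pose entries are kept unchanged
        }
    }
    return out;
}
```

Passing the detector in as a function keeps the log handling testable without images or OpenCV.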

As far as I remember, the coordinate system of the output did not coincide with the coordinate system of the recorded ground truth. More investigation is needed to get this right.

To do

Must do:

- Make the output coordinate system match the ground truth coordinate system.
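If detect_aruco.cc uses OpenCV's aruco module, one possible cause of the mismatch is a convention difference: OpenCV returns the marker's pose expressed in the camera's optical frame (x right, y down, z forward), whereas ROS body-frame conventions use x forward, y left, z up. The usual remedy is to compose rigid transforms, T_world_camera = T_world_marker * inverse(T_camera_marker), followed by a fixed optical-to-body rotation. The sketch below covers only the composition and inversion, using unit quaternions in plain C++; the Transform type and function names are hypothetical, and it assumes the marker's world pose is known from the Gazebo world.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Quat { double w, x, y, z; };  // expected to be a unit quaternion

// Hamilton product a * b.
Quat mul(const Quat& a, const Quat& b) {
    return {a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
            a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
            a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
            a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w};
}

Quat conj(const Quat& q) { return {q.w, -q.x, -q.y, -q.z}; }

// Rotates v by unit quaternion q: q * (0, v) * conj(q).
Vec3 rotate(const Quat& q, const Vec3& v) {
    const Quat p = mul(mul(q, Quat{0, v.x, v.y, v.z}), conj(q));
    return {p.x, p.y, p.z};
}

// Rigid transform T_a_b: maps point coordinates from frame b into frame a.
struct Transform { Quat rotation; Vec3 translation; };

// T_a_c = T_a_b * T_b_c
Transform compose(const Transform& ab, const Transform& bc) {
    const Vec3 t = rotate(ab.rotation, bc.translation);
    return {mul(ab.rotation, bc.rotation),
            {t.x + ab.translation.x, t.y + ab.translation.y, t.z + ab.translation.z}};
}

// T_b_a = inverse(T_a_b); the conjugate is the inverse only for unit quaternions.
Transform inverse(const Transform& ab) {
    const Quat& q = ab.rotation;
    assert(std::abs(q.w * q.w + q.x * q.x + q.y * q.y + q.z * q.z - 1.0) < 1e-9);
    const Quat qi = conj(q);
    const Vec3 t = rotate(qi, ab.translation);
    return {qi, {-t.x, -t.y, -t.z}};
}

// Camera pose in the world, from the marker's known world pose and the
// detector's estimate of the marker in the camera frame.
Transform cameraInWorld(const Transform& worldMarker, const Transform& cameraMarker) {
    return compose(worldMarker, inverse(cameraMarker));
}
```

A round trip (derive T_camera_marker from a known camera pose, then recover the camera pose) is a cheap check that the composition order is right before comparing against the ground truth log.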

Kalman filter

This is an implementation of Bleser's original model. It needs a LOT of work.

To do

Must do:

- Make an automatic data provider that feeds each log entry generated by Gazebo to the filter and logs the resulting output.

- Find out how to translate Bleser’s camera measurement model to my setting.

- Test each of the IMU measurement models.

- Test each of the prediction models.

Optional: Too far in the future to know.
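For testing prediction and measurement models one at a time, a tiny reference filter can help. The sketch below is the textbook linear Kalman filter with a constant-velocity prediction model and a position-only measurement model in one dimension; it is not Bleser's model (which is nonlinear and fuses IMU and camera data), and the class name and the noise parameters q and r are illustrative assumptions.

```cpp
#include <array>
#include <cassert>

using Vec2 = std::array<double, 2>;
using Mat2 = std::array<std::array<double, 2>, 2>;

// State x = [position, velocity] with covariance P.
struct KalmanCV1D {
    Vec2 x{0.0, 0.0};
    Mat2 P{{{1.0, 0.0}, {0.0, 1.0}}};
    double q;  // process noise: variance of the white-noise acceleration
    double r;  // measurement noise variance

    KalmanCV1D(double q_, double r_) : q(q_), r(r_) {}

    // Prediction: x <- F x, P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
    // and Q from the discrete white-noise acceleration model.
    void predict(double dt) {
        assert(dt >= 0.0);
        x = {x[0] + dt * x[1], x[1]};
        const double p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1];
        const double p01 = P[0][1] + dt * P[1][1];
        const double p10 = P[1][0] + dt * P[1][1];
        const double p11 = P[1][1];
        const double dt2 = dt * dt, dt3 = dt2 * dt, dt4 = dt3 * dt;
        P = {{{p00 + q * dt4 / 4, p01 + q * dt3 / 2},
              {p10 + q * dt3 / 2, p11 + q * dt2}}};
    }

    // Update with a position measurement z, i.e. H = [1, 0].
    void update(double z) {
        const double s = P[0][0] + r;            // innovation variance
        const Vec2 k{P[0][0] / s, P[1][0] / s};  // Kalman gain
        const double y = z - x[0];               // innovation
        x = {x[0] + k[0] * y, x[1] + k[1] * y};
        // P <- (I - K H) P
        P = {{{(1 - k[0]) * P[0][0], (1 - k[0]) * P[0][1]},
              {P[1][0] - k[1] * P[0][0], P[1][1] - k[1] * P[0][1]}}};
    }
};
```

Feeding it noise-free positions of a body moving at constant speed should recover that speed; the same idea (synthetic input with a known answer) could serve for testing each of the real prediction and measurement models.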

GPlearn

I have not implemented anything for this yet.

To do

Must do:

- Read up on the method. How does the information flow exactly?

- See whether Gaussian process tools are already available, and how to exchange data with them.
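To make the basic information flow concrete: a Gaussian process regressor takes training inputs and targets, builds a kernel (covariance) matrix, and returns a predictive mean and variance at new inputs. The sketch below is plain one-dimensional GP regression with a squared-exponential kernel and a Cholesky solve; the kernel choice, the hyperparameters in GpParams and all function names are illustrative assumptions, and it says nothing yet about how GPlearn itself uses the GP.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

struct GpParams {
    double lengthScale = 1.0;
    double signalVar = 1.0;
    double noiseVar = 1e-4;
};

// Squared-exponential kernel k(a, b) = s^2 exp(-(a - b)^2 / (2 l^2)).
double kernel(double a, double b, const GpParams& p) {
    const double d = a - b;
    return p.signalVar * std::exp(-d * d / (2.0 * p.lengthScale * p.lengthScale));
}

// Cholesky factorisation A = L L^T of a copy of A; returns lower-triangular L.
Matrix cholesky(Matrix a) {
    const std::size_t n = a.size();
    for (std::size_t j = 0; j < n; ++j) {
        for (std::size_t k = 0; k < j; ++k) a[j][j] -= a[j][k] * a[j][k];
        assert(a[j][j] > 0.0 && "kernel matrix must be positive definite");
        a[j][j] = std::sqrt(a[j][j]);
        for (std::size_t i = j + 1; i < n; ++i) {
            for (std::size_t k = 0; k < j; ++k) a[i][j] -= a[i][k] * a[j][k];
            a[i][j] /= a[j][j];
        }
        for (std::size_t k = j + 1; k < n; ++k) a[j][k] = 0.0;  // clear upper part
    }
    return a;
}

// Solves (L L^T) x = b by forward and back substitution.
std::vector<double> choleskySolve(const Matrix& L, std::vector<double> b) {
    const std::size_t n = L.size();
    for (std::size_t i = 0; i < n; ++i) {  // L y = b
        for (std::size_t k = 0; k < i; ++k) b[i] -= L[i][k] * b[k];
        b[i] /= L[i][i];
    }
    for (std::size_t i = n; i-- > 0;) {    // L^T x = y
        for (std::size_t k = i + 1; k < n; ++k) b[i] -= L[k][i] * b[k];
        b[i] /= L[i][i];
    }
    return b;
}

struct Prediction { double mean; double variance; };

// Posterior of the latent function at xStar, given training data (xs, ys).
Prediction gpPredict(const std::vector<double>& xs, const std::vector<double>& ys,
                     double xStar, const GpParams& p) {
    assert(xs.size() == ys.size() && !xs.empty());
    const std::size_t n = xs.size();
    Matrix K(n, std::vector<double>(n));
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            K[i][j] = kernel(xs[i], xs[j], p) + (i == j ? p.noiseVar : 0.0);
    const Matrix L = cholesky(K);
    const std::vector<double> alpha = choleskySolve(L, ys);  // K^-1 y
    std::vector<double> kStar(n);
    for (std::size_t i = 0; i < n; ++i) kStar[i] = kernel(xs[i], xStar, p);
    const std::vector<double> v = choleskySolve(L, kStar);   // K^-1 k*
    double mean = 0.0, reduction = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        mean += kStar[i] * alpha[i];
        reduction += kStar[i] * v[i];
    }
    return {mean, kernel(xStar, xStar, p) - reduction};
}
```

Near the training data the predictive variance shrinks towards the noise level; far away the mean falls back to zero and the variance to the prior signal variance.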

Writing

Nothing has happened at all. I tried writing, but got stuck on the first sentence every time. I also kept going over the layout again and again. I really have to stop doing that, as the layout is not important.