Thesis intro

For my Master’s thesis I will investigate marker-less tracking of a head-mounted display with a particle filter for augmented reality applications. Sensor fusion will be applied to the measurements of an inertial measurement unit and a stereo camera.

Augmented reality (AR) research aims to fuse a user’s perception of the real world with a virtual world. This can involve hearing, smell or taste, but most often the focus is on augmenting what is seen and felt. In my research, I will focus on visual augments, presented by a head-mounted display (HMD).

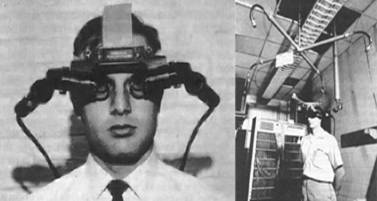

Examples of head-mounted displays. Top: The Sword of Damocles, a virtual reality system with the first HMD, dates from the 1960s. Bottom: My supervisor Toby wearing one of Cybermind’s research devices.

Adding virtual objects to the display is not the biggest challenge; the hard part is integrating them as if they really belong in the scene, and allowing the user to interact with them. One such challenge is knowing the 3D pose of the system in the real world, so that the augments can be kept at a fixed real-world position. Keeping this pose estimate up to date is essential to sustain the user’s impression of a truly fused percept.
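To see why the pose matters: an augment anchored at a fixed world position has to be re-expressed in the head frame every time it is drawn, and that transformation needs the head pose. The sketch below is a simplification, assuming a planar pose (x, y, yaw) instead of the full 6-DoF pose of a real HMD; the function `world_to_head` and all numbers are hypothetical. It shows how a yaw error of only one degree displaces an augment placed 2 m in front of the user.

```python
import math

def world_to_head(point_w, head_pos_w, head_yaw):
    """Express a world-anchored point in the head frame.

    Hypothetical planar pose (x, y, yaw); x points forward, y to the left.
    The offset to the point is rotated by the inverse head rotation R(yaw)^T.
    """
    dx = point_w[0] - head_pos_w[0]
    dy = point_w[1] - head_pos_w[1]
    c, s = math.cos(head_yaw), math.sin(head_yaw)
    return (c * dx + s * dy, -s * dx + c * dy)

anchor = (2.0, 0.0)  # hypothetical augment, fixed 2 m in front of the start pose
true_view = world_to_head(anchor, (0.0, 0.0), 0.0)
# Same head pose, but the tracker's yaw estimate is off by 1 degree
estimated_view = world_to_head(anchor, (0.0, 0.0), math.radians(1.0))
misplacement_m = math.dist(true_view, estimated_view)
print(f"augment drawn {misplacement_m:.3f} m away from where it should be")
```

With these numbers the augment is drawn roughly 3.5 cm from its intended position (a chord of 2 · 2 m · sin 0.5°), which is already noticeable when overlaid on a real object.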

The AIBO ERS-7’s charger came with two markers, so that the robot could determine its distance and orientation with respect to the charger. Image source: Conscious Robotics.

To make this problem (slightly) easier, the real-world environment can be prepared with fiducial markers. Tracking your position in this way is a largely solved problem, and the technology is used in consumer products, such as the Nintendo 3DS’s AR cards.
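As an illustration of why markers make the problem easier: a marker of known physical size directly yields a distance measurement. This is a minimal sketch, assuming an ideal pinhole camera without lens distortion and a marker facing the camera head-on; `marker_range_and_bearing` and its parameters are hypothetical. Practical marker trackers go further and recover the full 6-DoF pose from the marker's four corners.

```python
import math

def marker_range_and_bearing(focal_px, marker_width_m, apparent_width_px,
                             center_u_px, principal_u_px):
    """Estimate distance and horizontal bearing to a fronto-parallel marker.

    Assumes an ideal pinhole camera (no lens distortion) and a marker
    facing the camera head-on, so its apparent width scales as 1 / distance.
    """
    # Similar triangles: apparent_width / focal = marker_width / distance
    distance_m = focal_px * marker_width_m / apparent_width_px
    # Horizontal angle of the marker centre relative to the optical axis
    bearing_rad = math.atan2(center_u_px - principal_u_px, focal_px)
    return distance_m, bearing_rad

# Hypothetical numbers: 600 px focal length, 10 cm marker seen 60 px wide,
# centred in a 640 px wide image
d, b = marker_range_and_bearing(600.0, 0.10, 60.0, 320.0, 320.0)
print(f"distance {d:.2f} m, bearing {math.degrees(b):.1f} deg")
```

With these numbers the marker is 1 m away, straight ahead. A charger with two such markers, as on the AIBO, additionally constrains the robot's orientation relative to the charger.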

A big disadvantage of this approach is the need for preparation: new environments have to be fitted with markers before the augments can be provided. When the target of your augments is mobile, a marker has to be attached to it. In many cases this is infeasible or undesirable, for example in medical and military applications.

Marker-less tracking

Earlier in my Master’s study I did several projects on self-localization within the RoboCup Standard Platform League (SPL), which resulted in two project reports (Gudi et al., 2013; de Kok et al., 2013) and a publication (Methenitis et al., 2013). RoboCup SPL is a robot soccer league in which all robots of all teams use the same hardware: the small humanoid NAO robot, playing on a field with fixed dimensions and colors. Finding your pose on the field is done without any true markers; while there are specific landmarks, they are either not unique or yield only a small gain of information. Placing markers in or around the field (for example, teammates wearing easy-to-recognize shirts) is considered cheating.

Random dudes doing science with a NAO H25. This is the small humanoid which is used in RoboCup SPL competitions. Image source: Aldebaran Robotics.

However, in the SPL scenario the map is already known. In an unknown environment, the paradigm of simultaneous localization and mapping (SLAM) is more appropriate. There is a large body of literature on SLAM, mainly from robotics. In both pure localization and SLAM, the robot estimates its displacement from the action it performed (“according to my wheel odometry, I moved one meter straight ahead, so I probably moved about that much”). Depending on the mode of movement (precision of the odometry) and the environment (for instance slipperiness or obstacles), this estimate can be off. It is therefore corrected with observations from other sensors, such as laser rangefinders and cameras.
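The predict-and-correct cycle described above is what a particle filter implements: each particle is a pose hypothesis that is moved with the odometry (predict) and then weighted by how well it explains the observations (correct). This is a minimal sketch, assuming a robot moving along a line that measures its range to a single landmark at a known position (a known map, as in SPL); all names, noise levels and the 5 cm of wheel slip per step are hypothetical.

```python
import math
import random
from itertools import accumulate

def gaussian_pdf(x, mean, std):
    """Density of the normal distribution N(mean, std^2) at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))

def systematic_resample(particles, weights, rng):
    """Draw len(particles) particles proportionally to weights (which sum to 1)."""
    n = len(particles)
    cumulative = list(accumulate(weights))
    cumulative[-1] = 1.0  # guard against rounding error
    start = rng.random() / n
    resampled, j = [], 0
    for k in range(n):
        position = start + k / n
        while cumulative[j] < position:
            j += 1
        resampled.append(particles[j])
    return resampled

def particle_filter_1d(odometry, ranges, landmark, n_particles=500,
                       odo_std=0.1, range_std=0.2, seed=0):
    """Track a robot on a line from noisy odometry and ranges to a known landmark."""
    rng = random.Random(seed)
    particles = [rng.gauss(0.0, 0.5) for _ in range(n_particles)]  # prior around start
    estimates = []
    for u, z in zip(odometry, ranges):
        # Predict: move every particle by the odometry reading plus motion noise
        particles = [x + u + rng.gauss(0.0, odo_std) for x in particles]
        # Correct: weight each particle by how well it explains the measured range
        weights = [gaussian_pdf(z, landmark - x, range_std) for x in particles]
        total = sum(weights)
        weights = [w / total for w in weights]
        estimates.append(sum(w * x for w, x in zip(weights, particles)))
        # Resample to concentrate particles in likely regions
        particles = systematic_resample(particles, weights, rng)
    return estimates

# Hypothetical run: the wheels report 1.0 m per step, but they slip
# and the robot actually moves only 0.95 m per step.
LANDMARK = 20.0
world = random.Random(42)
true_x, odometry, ranges = 0.0, [], []
for _ in range(10):
    true_x += 0.95
    odometry.append(1.0)
    ranges.append(LANDMARK - true_x + world.gauss(0.0, 0.2))

estimates = particle_filter_1d(odometry, ranges, LANDMARK)
print(f"odometry only: {sum(odometry):.2f} m, "
      f"filter: {estimates[-1]:.2f} m, true: {true_x:.2f} m")
```

Dead reckoning alone ends at 10 m while the robot really traveled 9.5 m; the range observations should keep the filter estimate close to the true position. Systematic resampling is one common choice among several resampling schemes.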

Sensors

In the case of AR, no odometry is available to the system: unlike a robot, the system does not control its own movement, so without extra sensors there is no adequate predictor of the user’s movement. By adding an inertial measurement unit (IMU) to the HMD, we might be able to provide some basic motion information as input. The IMU contains an accelerometer, a gyroscope and a magnetometer, whose readings are combined into a single estimate by means of sensor fusion. A nice introductory talk is available on YouTube. The accelerometer measures linear acceleration and the gyroscope measures angular velocity; these measurements are accurate in the short term, but integrating them over time accumulates drift. The magnetometer measures the Earth’s magnetic field, which lets you correct the drift in heading.
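Correcting gyroscope drift with a magnetometer can be illustrated with a complementary filter, a much simpler fusion scheme than the particle filter planned for this thesis. This is a minimal sketch, assuming that only the heading (yaw) is estimated, that the magnetometer already delivers a heading angle, and a hypothetical blending factor `alpha`.

```python
import math

def wrap_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi

def complementary_heading(gyro_rates, mag_headings, dt, alpha=0.98, initial=0.0):
    """Fuse gyroscope yaw rates (rad/s) with magnetometer headings (rad).

    Each step integrates the gyro, then nudges the result a fraction
    (1 - alpha) of the way towards the magnetometer heading.
    """
    heading = initial
    for omega, mag in zip(gyro_rates, mag_headings):
        predicted = wrap_angle(heading + omega * dt)  # gyro integration (drifts)
        correction = wrap_angle(mag - predicted)       # shortest angular difference
        heading = wrap_angle(predicted + (1.0 - alpha) * correction)
    return heading

# Hypothetical scenario: the head is not rotating, but the gyro has a 0.01 rad/s bias
dt, bias, steps = 0.01, 0.01, 1000
gyro = [bias] * steps
mag = [0.0] * steps  # noise-free magnetometer, for clarity
integrated = sum(omega * dt for omega in gyro)
fused = complementary_heading(gyro, mag, dt)
print(f"gyro only: {integrated:.4f} rad, fused: {fused:.4f} rad")
```

Integrating the biased gyroscope alone drifts to about 0.1 rad after 10 s, whereas the fused heading settles near alpha · bias · dt / (1 - alpha) ≈ 0.0049 rad. A larger `alpha` trusts the gyroscope more, which smooths magnetometer noise but corrects drift more slowly.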

Further observations will be made with two cameras for stereo vision. Together with the IMU-based odometry, this should allow for reasonably accurate pose estimates. How all this information is to be combined is my main research topic. This will most likely be done with a marginalized particle filter (Schön et al., 2005). Because the IMU readings already drive the prediction step, they should not be reused directly as observations, since that would count the same information twice; we will nevertheless investigate how this data can be used optimally in the observation stage as well.
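The idea of a marginalized (also called Rao-Blackwellized) particle filter is to sample only the nonlinear part of the state, and to handle the part that is linear and Gaussian given those samples with a Kalman filter per particle. This is a minimal sketch on a hypothetical toy model, not the actual state of this thesis: a 1D position (nonlinear state) is observed through a nonlinear range measurement offset by an unknown, slowly varying sensor bias (linear state). It covers only the special case in which the position dynamics do not depend on the bias; the general algorithm of Schön et al. (2005) also handles models where they do.

```python
import math
import random

BEACON_HEIGHT = 2.0  # hypothetical: beacon mounted 2 m above the track

def h(p):
    """Nonlinear measurement function: distance from track position p to the beacon."""
    return math.sqrt(p * p + BEACON_HEIGHT ** 2)

def marginalized_pf(odometry, measurements, n=300, q_p=0.02 ** 2, q_b=1e-6,
                    r=0.05 ** 2, seed=1):
    """Sample the position; run a scalar Kalman filter per particle for the bias."""
    rng = random.Random(seed)
    pos = [1.0 + rng.gauss(0.0, 0.1) for _ in range(n)]  # nonlinear state: particles
    b_mean = [0.0] * n  # linear state: Gaussian mean per particle
    b_var = [1.0] * n   # ... and its variance
    pos_est, bias_est = sum(pos) / n, 0.0
    for u, y in zip(odometry, measurements):
        log_w = []
        for i in range(n):
            # Sample the nonlinear state forward with the (noisy) odometry
            pos[i] += u + rng.gauss(0.0, math.sqrt(q_p))
            # Kalman time update: the bias follows a slow random walk
            b_var[i] += q_b
            # Given the position, y - h(p) is linear-Gaussian in the bias
            innov = y - h(pos[i]) - b_mean[i]
            s = b_var[i] + r
            # Particle weight: likelihood with the bias integrated out analytically
            log_w.append(-0.5 * (innov * innov / s + math.log(2.0 * math.pi * s)))
            # Kalman measurement update of the bias
            k = b_var[i] / s
            b_mean[i] += k * innov
            b_var[i] *= 1.0 - k
        top = max(log_w)
        w = [math.exp(lw - top) for lw in log_w]
        total = sum(w)
        w = [wi / total for wi in w]
        pos_est = sum(wi * p for wi, p in zip(w, pos))
        bias_est = sum(wi * m for wi, m in zip(w, b_mean))
        # Resample whole particles: a position together with its bias Gaussian
        idx = rng.choices(range(n), weights=w, k=n)
        pos = [pos[j] for j in idx]
        b_mean = [b_mean[j] for j in idx]
        b_var = [b_var[j] for j in idx]
    return pos_est, bias_est

# Hypothetical run with a constant sensor bias of 0.5 m
world = random.Random(7)
true_pos, true_bias, odometry, ys = 1.0, 0.5, [], []
for _ in range(30):
    true_pos += 0.2
    odometry.append(0.2)
    ys.append(h(true_pos) + true_bias + world.gauss(0.0, 0.05))

pos_est, bias_est = marginalized_pf(odometry, ys)
print(f"position {pos_est:.2f} (true {true_pos:.2f}), "
      f"bias {bias_est:.2f} (true {true_bias})")
```

Because the bias is integrated out analytically, the particles only need to cover the one-dimensional position, which typically allows fewer particles than sampling both quantities.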

Thesis details

Because of university requirements, I have several supervisors:

- Toby Hijzen is an employee of Cybermind, a Dutch company specializing in high-end HMDs. Toby is my daily supervisor. He is based in Delft University of Technology’s Biorobotics Lab (DBL).

- Arnoud Visser is the supervisor from the Universiteit van Amsterdam, where I am doing my Master’s in Artificial Intelligence. As a member of the Intelligent Systems Lab Amsterdam (ISLA), he has supervised several of my projects since my Bachelor’s in AI, and I am glad to work with him.

- Pieter Jonker is a staff member of the DBL.

References

- Hideyuki Tamura. Steady steps and giant leap toward practical mixed reality systems and applications. In Proceedings of the International Status Conference on Virtual and Augmented Reality, pages 3–12, Leipzig, Germany, November 2002. PDF: http://www.rm.is.ritsumei.ac.jp/~tamura/paper/vr-ar2002.pdf

- Thomas Schön, Fredrik Gustafsson, and Per-Johan Nordlund. Marginalized particle filters for mixed linear/nonlinear state-space models. IEEE Transactions on Signal Processing, 53(7):2279–2289, 2005. DOI: 10.1109/TSP.2005.849151

- Amogh Gudi, Patrick de Kok, Georgios Methenitis, and Nikolaas Steenbergen. Feature detection and localization for the RoboCup Soccer SPL. Project report, Universiteit van Amsterdam, February 2013. PDF: http://www.dutchnaoteam.nl/wp-content/uploads/2013/03/GudiDeKokMethenitisSteenbergen.pdf

- Patrick M. de Kok, Georgios Methenitis, and Sander Nugteren. ViCTOriA: Visual Compass To Orientate Accurately. Project report, Universiteit van Amsterdam, July 2013. PDF: http://www.dutchnaoteam.nl/wp-content/uploads/2013/07/ViCTOriA.pdf

- Georgios Methenitis, Patrick M. de Kok, Sander Nugteren, and Arnoud Visser. Orientation finding using a grid based visual compass. In 25th Belgian-Netherlands Conference on Artificial Intelligence (BNAIC 2013), pages 128–135, Delft University of Technology, the Netherlands, November 2013. PDF: https://staff.fnwi.uva.nl/a.visser/publications/GridBasedVisualCompass.pdf