Now that the camera is triggered by the IMU and I implemented the first model of Bleser et al., I really need to obtain the extrinsic multi-sensor calibration. Otherwise I can’t test that first model.

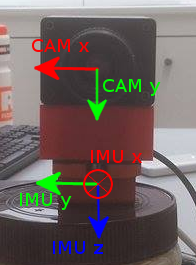

Sensor setup and coordinate systems. I noticed I never uploaded an image of this.
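To make concrete what the extrinsic calibration has to deliver: it is a rigid-body transform (a rotation plus a translation) between the IMU frame and the camera frame. Below is a minimal sketch in plain Python. The function names, the direction of the transform (IMU to camera) and the numbers are illustrative assumptions, not values from my setup.

```python
def transform_point(R, t, p):
    """Apply the rigid-body transform p' = R * p + t to a 3D point.

    R is a 3x3 rotation matrix (nested lists), t a translation (3 values).
    Assumed convention: (R, t) maps IMU-frame coordinates to camera-frame
    coordinates. The opposite convention is just as common.
    """
    return tuple(
        sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)
    )


def invert_transform(R, t):
    """Return the inverse transform (R^T, -R^T * t)."""
    R_inv = [[R[j][i] for j in range(3)] for i in range(3)]  # transpose
    t_inv = [-sum(R_inv[i][j] * t[j] for j in range(3)) for i in range(3)]
    return R_inv, t_inv


# Hypothetical extrinsics: 90 degree rotation about z, 10 cm offset along x.
R_cam_imu = [[0.0, -1.0, 0.0],
             [1.0, 0.0, 0.0],
             [0.0, 0.0, 1.0]]
t_cam_imu = [0.1, 0.0, 0.0]

p_imu = (1.0, 0.0, 0.0)
p_cam = transform_point(R_cam_imu, t_cam_imu, p_imu)

# Going back with the inverse transform recovers the original point.
R_imu_cam, t_imu_cam = invert_transform(R_cam_imu, t_cam_imu)
p_back = transform_point(R_imu_cam, t_imu_cam, p_cam)
```

Any error in `R_cam_imu` or `t_cam_imu` ends up in every measurement that is moved between the two frames, which is why the filter cannot be tested properly without a decent calibration.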

I have tried Kalibr again (see Furgale et al.). This gave me a different issue than before. After "initializing a pose spline", it prints a ROS fatal message: `[FATAL] Failed to obtain orientation prior!`. I contacted the authors about this. They noted that the camera often lost track of the calibration target, and that when it did find the target, the reprojection of the corners was messy. They suggested that I (1) keep the calibration target in the camera’s field of view, (2) improve the lighting conditions, and (3) obtain better intrinsic camera calibration parameters. What I did for each:

- Done.

- I aimed two construction lights at the calibration target, and switched on the construction lights around the football field in our RoboCup lab. This second set of lights gives more diffuse light.

- I used the camera calibration tool that comes with Kalibr. I decided to use the `pinhole` camera model and the `radtan` (radial-tangential) distortion model.
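As a reminder of what these intrinsic parameters do, here is a minimal sketch of a pinhole projection with radial-tangential distortion, plus the reprojection error the Kalibr authors were referring to. It uses the common textbook form of the model (two radial and two tangential coefficients). The function names, the parameter ordering and the numbers are my own assumptions, so check them against the Kalibr documentation before comparing with its output.

```python
import math


def project_pinhole_radtan(point_cam, intrinsics, distortion):
    """Project a 3D point given in the camera frame to pixel coordinates.

    intrinsics: (fu, fv, cu, cv) focal lengths and principal point, pixels.
    distortion: (k1, k2, p1, p2) radial and tangential coefficients.
    The ordering of both tuples is an assumption made for this sketch.
    """
    X, Y, Z = point_cam
    if Z <= 0.0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    fu, fv, cu, cv = intrinsics
    k1, k2, p1, p2 = distortion

    # Normalized image coordinates (ideal pinhole, before distortion).
    x, y = X / Z, Y / Z
    r2 = x * x + y * y

    # Radial scaling plus tangential offsets.
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y

    # Scale by the focal lengths and shift to the principal point.
    return fu * x_d + cu, fv * y_d + cv


def reprojection_error(observed_px, point_cam, intrinsics, distortion):
    """Pixel distance between a detected corner and its predicted projection."""
    u, v = project_pinhole_radtan(point_cam, intrinsics, distortion)
    return math.hypot(observed_px[0] - u, observed_px[1] - v)


# Hypothetical values, for illustration only.
intr = (500.0, 500.0, 320.0, 240.0)
dist = (0.1, 0.0, 0.0, 0.0)
u, v = project_pinhole_radtan((0.1, 0.0, 1.0), intr, dist)
err = reprojection_error((371.0, 240.0), (0.1, 0.0, 1.0), intr, dist)
```

With poor intrinsics or blurred corner detections the reprojection errors become large and scattered, which matches what the authors saw in my data.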

Other extrinsic multi-sensor calibration methods that seem worth investigating, in this order and in parallel with the email exchange with the Kalibr team:

- Lobo and Dias’s work. Although it requires a turntable and specific placement of the sensors, and is described as "labor intensive" by Hol, I do recall that they had a publicly available implementation. I haven’t found it yet.

- Kelly and Sukhatme, and Mirzaei and Roumeliotis, use Kalman filter-based calibration methods (an unscented and an extended Kalman filter, respectively).

- Hol uses a "grey-box" method.

But if I can’t reduce the motion blur to a level that a calibration method can cope with, I might need to switch to a simulated environment for my experiments. Toby was okay with this, but I will also have to discuss it with Arnoud.

References

- Jorge Lobo and Jorge Dias. Relative pose calibration between visual and inertial sensors. The International Journal of Robotics Research, 26(6):561–575, June 2007. doi:10.1177/0278364907079276. http://ijr.sagepub.com/content/26/6/561.full.pdf

- Faraz M. Mirzaei and Stergios I. Roumeliotis. A Kalman filter-based algorithm for IMU-camera calibration: Observability analysis and performance evaluation. IEEE Transactions on Robotics, 24(5):1143–1156, October 2008. doi:10.1109/TRO.2008.2004486

- Gabriele Bleser and Didier Stricker. Advanced tracking through efficient image processing and visual-inertial sensor fusion. In Virtual Reality Conference, pages 137–144. IEEE, 2008. doi:10.1109/VR.2008.4480765

- Jeroen D. Hol. Sensor fusion and calibration of inertial sensors, vision, ultrawideband and GPS. PhD thesis, Linköping University, Institute of Technology, 2011. http://user.it.uu.se/~thosc112/team/hol2011.pdf

- Jonathan Kelly and Gaurav S Sukhatme. Visual-inertial sensor fusion: Localization, mapping and sensor-to-sensor self-calibration. The International Journal of Robotics Research, 30(1):56–79, 2011. doi:10.1177/0278364910382802. http://ijr.sagepub.com/content/30/1/56

- Paul Furgale, Joern Rehder, and Roland Siegwart. Unified temporal and spatial calibration for multi-sensor systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 1280–1286, Tokyo, Japan, November 2013. doi:10.1109/IROS.2013.6696514